2024 AI Trends: Transforming Creativity with the 6 Best AI Tools

The dawn of 2024 brings a surge of AI trends that are reshaping the creative industries. As artificial intelligence becomes more deeply integrated into daily life, its influence on creativity is more profound than ever. This year, expect a host of AI-powered tools that not only streamline creative workflows but also open up new forms of artistic expression. The tools below, selected for their innovation and practical utility, promise to add a fresh spark to your creative work. Join us as we explore the cutting-edge trends and tools that will define the creative landscape in 2024.

The 6 Best Next-Gen AI Tools

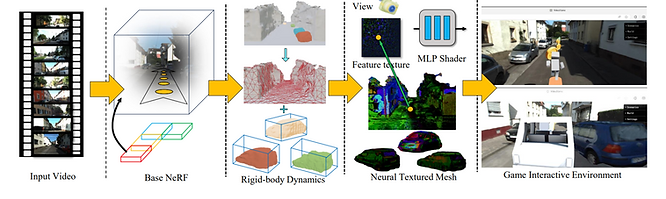

1. Video2Game

Video2Game is a groundbreaking AI approach that converts videos of real-world scenes into realistic, interactive game environments. The system has three core components: (1) a neural radiance field (NeRF) module that captures the scene’s geometry and appearance; (2) a mesh module that distills the NeRF’s knowledge into a mesh for faster rendering; and (3) a physics module that models the interactions and physical behavior of objects in the scene.

Together, these modules let users build digital replicas of real-world environments that are both interactive and actionable. Benchmarked on a range of indoor and outdoor scenes, the system renders highly realistic views in real time and can be used to build interactive games. This trend marks a significant step toward automating the creation of virtual environments.
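To give a sense of what a NeRF module computes, here is a minimal Python sketch of the standard NeRF volume-rendering step, in which the densities and colors sampled along one camera ray are alpha-composited into a single pixel color. This is a generic illustration of the technique, not Video2Game’s code; the function name `render_ray` and the sample values are assumptions made for the example.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Composite samples along one ray into an RGB color (standard NeRF quadrature).

    sigmas: volume density at each sample (>= 0)
    colors: (r, g, b) emitted color at each sample, components in [0, 1]
    deltas: distance between consecutive samples along the ray
    Returns (rgb, accumulated_opacity).
    """
    transmittance = 1.0  # fraction of light not yet absorbed before this sample
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # how much this sample contributes
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha            # light left for samples further along
    return tuple(rgb), 1.0 - transmittance


# Hypothetical ray: empty space, then a dense red surface, then a green sample hidden behind it.
rgb, opacity = render_ray(
    sigmas=[0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.1, 0.1, 0.1],
)
print(rgb, opacity)
```

Because the red sample is nearly opaque, it dominates the pixel and the green sample behind it contributes almost nothing. Per-sample weights like these are also what make it possible to convert a NeRF into an explicit surface such as a mesh.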

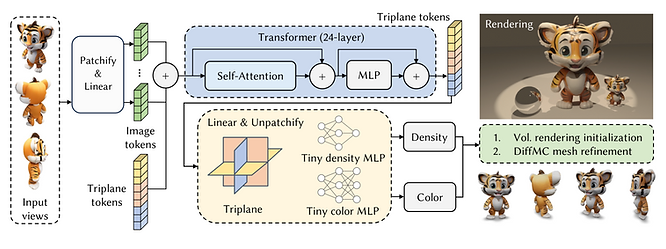

2. MeshLRM

MeshLRM is a pioneering technique built on large reconstruction models (LRMs) that reconstructs a high-quality mesh from just four input images in under one second. Whereas earlier LRMs focus on NeRF-based reconstruction, MeshLRM incorporates differentiable mesh extraction and rendering into the LRM framework. This enables end-to-end mesh reconstruction: a pre-trained NeRF-based LRM is fine-tuned with mesh rendering.

MeshLRM also improves the LRM architecture by simplifying several complex designs from previous LRMs. Its NeRF initialization is trained sequentially, first on low-resolution and then on high-resolution images, which speeds up convergence and yields better quality with less compute. The result is state-of-the-art mesh reconstruction from sparse-view inputs, and the model supports many downstream applications, such as text-to-3D and single-image-to-3D generation, in line with the latest generative AI trends.
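The idea of training sequentially on low- and then high-resolution images can be expressed as a simple step-based curriculum. The sketch below is a hypothetical illustration only: the stage names, step counts, resolutions (256 and 512 pixels), and the helper `stage_for_step` are assumptions chosen for the example, not MeshLRM’s published settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    num_steps: int         # how many optimizer steps this stage lasts
    image_resolution: int  # side length (pixels) of the training images


# Hypothetical two-stage curriculum: many cheap low-res steps, then a shorter high-res phase.
SCHEDULE = [
    Stage("low_res_pretrain", num_steps=100_000, image_resolution=256),
    Stage("high_res_finetune", num_steps=20_000, image_resolution=512),
]


def stage_for_step(step, schedule=SCHEDULE):
    """Return the stage active at a given global training step (0-indexed)."""
    if step < 0:
        raise ValueError("step must be non-negative")
    boundary = 0
    for stage in schedule:
        boundary += stage.num_steps
        if step < boundary:
            return stage
    return schedule[-1]  # past the end: stay at the final (highest) resolution


for step in (0, 99_999, 100_000, 150_000):
    s = stage_for_step(step)
    print(step, s.name, s.image_resolution)
```

The general intuition behind such a schedule is that low-resolution iterations cost less, so most of the training budget can be spent there before a shorter high-resolution phase refines fine detail.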


3. Open-Sora

Open-Sora is an open-source text-to-video model that aims to make advanced video generation techniques accessible to everyone, offering a straightforward platform that hides much of the complexity of video production. It can generate videos at resolutions from 144p to 720p and in a variety of aspect ratios, covering a wide range of project needs. It can also generate high-quality still images, so a single tool handles both video and image creation. Its training recipe follows the approach of Stable Video Diffusion (SVD) and proceeds in three stages: large-scale image pre-training, large-scale video pre-training, and fine-tuning on high-quality video data.
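Because Open-Sora accepts both a resolution tier (144p to 720p) and an aspect ratio, it can help to see how the two combine into concrete frame sizes. The helper below is a hypothetical sketch, not part of Open-Sora’s codebase: it assumes the “p” number is the shorter side of the frame (as with 720p meaning 1280×720 in landscape) and rounds the longer side to an even pixel count, which many video encoders require.

```python
from fractions import Fraction


def frame_size(tier: int, aspect: str) -> tuple[int, int]:
    """Return (width, height) in pixels for a resolution tier and aspect ratio.

    tier:   the "p" number, e.g. 144, 360, 720 (assumed here to be the shorter side)
    aspect: "W:H", e.g. "16:9", "9:16", "1:1"
    """
    w, h = (int(x) for x in aspect.split(":"))
    ratio = Fraction(max(w, h), min(w, h))  # long side / short side, kept exact
    long_side = 2 * round(tier * ratio / 2)  # round to the nearest even pixel count
    # Landscape (or square) frames put the long side horizontally; portrait flips it.
    return (long_side, tier) if w >= h else (tier, long_side)


for tier in (144, 360, 720):
    for aspect in ("16:9", "9:16", "1:1"):
        print(tier, aspect, frame_size(tier, aspect))
```

Using `Fraction` keeps the ratio exact, so sizes such as 480p at 16:9 round predictably (to 854×480) instead of depending on floating-point error.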

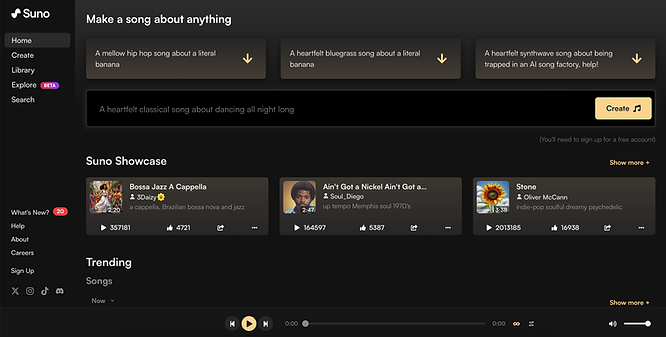

4. Suno AI

Suno AI is an innovative AI music generator that creates custom songs from text prompts using two main AI models, Bark and Chirp. From a single text prompt, the two models work together to produce both the vocal and non-vocal elements of a song: Bark, which specializes in vocals, sets the lyrics to melody, while Chirp handles the non-vocal components and crafts the musical arrangement. The result is a cohesive, personalized piece of music that showcases how far AI-driven creative tools have come.

5. GPT-4o

GPT-4o is designed to make advanced AI more accessible and useful worldwide, with improvements to both the quality and speed of its language capabilities. ChatGPT also now supports more than 50 languages for sign-up, login, and user settings. Users can hold voice conversations with ChatGPT directly from their computers, starting with the existing Voice Mode, with GPT-4o’s new audio and video capabilities to follow.

The “o” in GPT-4o stands for “omni”: the model accepts any combination of text, audio, image, and video as input and can generate text, audio, and image outputs. It can respond to audio input in as little as 232 milliseconds, with an average of 320 milliseconds, which is comparable to human response times in conversation. GPT-4o matches GPT-4 Turbo’s performance on English text and code, improves significantly on non-English languages, and is faster and 50% cheaper in the API. It is also notably better at vision and audio understanding than existing models.
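For developers, GPT-4o’s multimodal input is available through OpenAI’s API. The sketch below builds a Chat Completions request that mixes text and an image for the `gpt-4o` model, using the message format of the official `openai` Python package (v1.x). The prompt, the image URL, and the helper names `build_request` and `send` are placeholders for illustration; actually sending the request requires the package to be installed and an `OPENAI_API_KEY` environment variable. Audio and video input are not covered here.

```python
def build_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build keyword arguments for a text + image Chat Completions call (hypothetical helper)."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # A single user message can carry several content parts of different types.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def send(request: dict) -> str:
    """Send the request and return the model's text reply (needs network access and an API key)."""
    from openai import OpenAI  # imported lazily so build_request works without the package

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content


request = build_request(
    "Describe the lighting in this scene.",
    "https://example.com/scene.jpg",  # placeholder image URL
)
# print(send(request))  # uncomment to call the API
```

Keeping request construction separate from the network call makes the payload easy to inspect or test without spending API credits.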

6. VIVERSE for Business

VIVERSE for Business, nominated for Best Use of AI at the AWE 2024 Auggie Awards, supports spatial collaboration with AI features such as speech-to-text and real-time translation. Speech is transcribed and translated into multiple languages as people talk, and meeting summaries with action items are generated and emailed to participants automatically. These features help participants stay focused on the discussion, increasing engagement and interaction within extended reality (XR) environments. By enabling communication across languages and simplifying documentation, VIVERSE for Business improves workflow efficiency and supports the integration of VR, AR, and MR technologies.


Final Thoughts

As we move through 2024, the integration of next-generation AI tools into creative workflows marks a transformative era. These tools not only boost efficiency and productivity but also spark new waves of artistic innovation. By harnessing AI to generate music, art, and immersive experiences, creators are poised to explore territory that was previously out of reach. Embracing these advancements promises to redefine creativity itself, ushering in a future where human ingenuity and artificial intelligence work in harmony.

Curious how VIVERSE for Business can benefit your organization, or would you prefer a one-on-one discussion? Reach out to us at info_viverse@htc.com to arrange a meeting.